Pipelines

Pipelines provide fine-grained control over log event processing. The feature has two parts: topics, which filter events, and pipelines, which process the filtered events through a series of steps. By combining and reordering pipeline steps, you control how your events are transformed before they are sent to their destinations.

Getting Started: Navigate to Pipelines in RunReveal to create your first topic and pipeline, or manage existing ones.

Pipeline Architecture

Event Flow

- Events arrive from your configured sources

- Topics filter events based on preconditions

- Pipelines process filtered events through steps

- Events are sent to their final destinations

Topics: Event Filtering

Topics apply filters to select subsets of events before they enter a pipeline. They use preconditions to determine which events get routed to specific pipelines.

Topic Preconditions

A topic precondition can be:

- Source-based: Route events from specific sources (e.g., webhook sources)

- Field-based: Route events based on field values

- Custom criteria: Any combination of filtering conditions
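One way to picture these precondition kinds is as predicates over an event. The sketch below is hypothetical: events are plain dicts, the field names `sourceType` and `eventName` are assumptions, and `source_type`, `field_equals`, and `all_of` are illustrative helpers, not RunReveal APIs.

```python
from typing import Any, Callable

Event = dict[str, Any]
Precondition = Callable[[Event], bool]


def source_type(expected: str) -> Precondition:
    # Source-based: match events from one kind of source (hypothetical "sourceType" key).
    return lambda event: event.get("sourceType") == expected


def field_equals(field: str, value: Any) -> Precondition:
    # Field-based: match when a top-level field has a given value.
    return lambda event: event.get(field) == value


def all_of(*conditions: Precondition) -> Precondition:
    # Custom criteria: combine conditions so that every one must match.
    return lambda event: all(cond(event) for cond in conditions)


webhook_logins = all_of(source_type("webhook"), field_equals("eventName", "login"))

print(webhook_logins({"sourceType": "webhook", "eventName": "login"}))  # True
print(webhook_logins({"sourceType": "okta", "eventName": "login"}))     # False
```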

Managing Topics

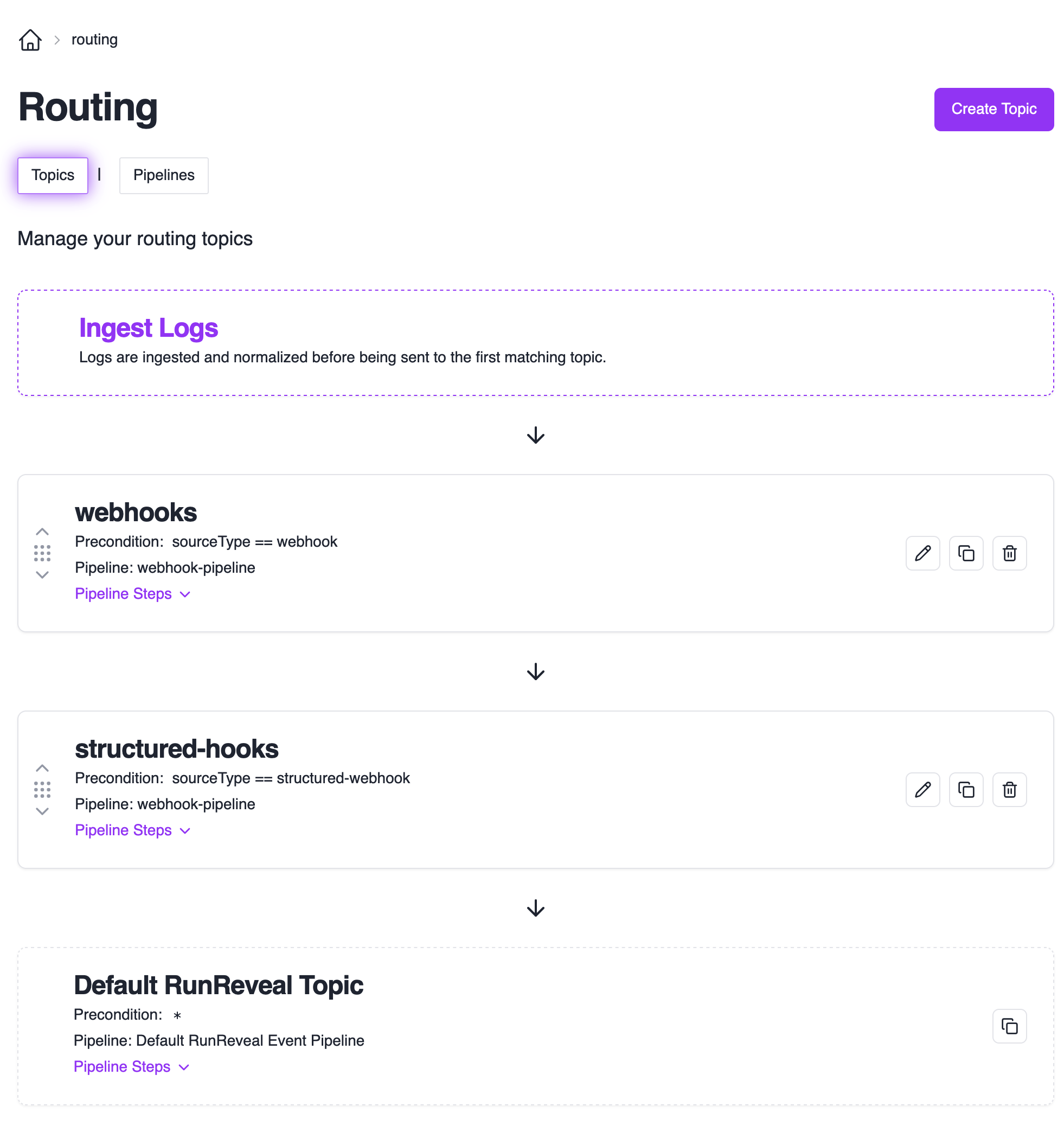

To manage your topics, navigate to the Pipelines page.

Topic Evaluation Order:

- Events are evaluated against topics from top to bottom

- Events that don’t match any custom topics go to the default RunReveal pipeline

- Topics can be reordered by dragging them up/down in the list
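The evaluation order can be modeled as a search over an ordered list that falls back to the default pipeline. This sketch assumes an event is routed to the first topic it matches, which is how top-to-bottom evaluation is read here; the topic names, field names, and the `route` helper are hypothetical.

```python
from typing import Any, Callable

Event = dict[str, Any]
Topic = tuple[str, Callable[[Event], bool]]  # (topic name, precondition)


def route(event: Event, topics: list[Topic]) -> str:
    # Evaluate topics top to bottom; the first matching precondition wins.
    for name, matches in topics:
        if matches(event):
            return name
    # No custom topic matched: fall back to the default pipeline.
    return "default"


topics: list[Topic] = [
    ("webhooks", lambda e: e.get("sourceType") == "webhook"),
    ("admin-activity", lambda e: e.get("isAdmin", False)),
]
event = {"sourceType": "webhook", "isAdmin": True}

print(route(event, topics))                   # webhooks
print(route(event, list(reversed(topics))))   # admin-activity (after reordering)
print(route({"sourceType": "okta"}, topics))  # default
```

Because the sample event matches both topics, dragging "admin-activity" above "webhooks" changes where it is routed.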

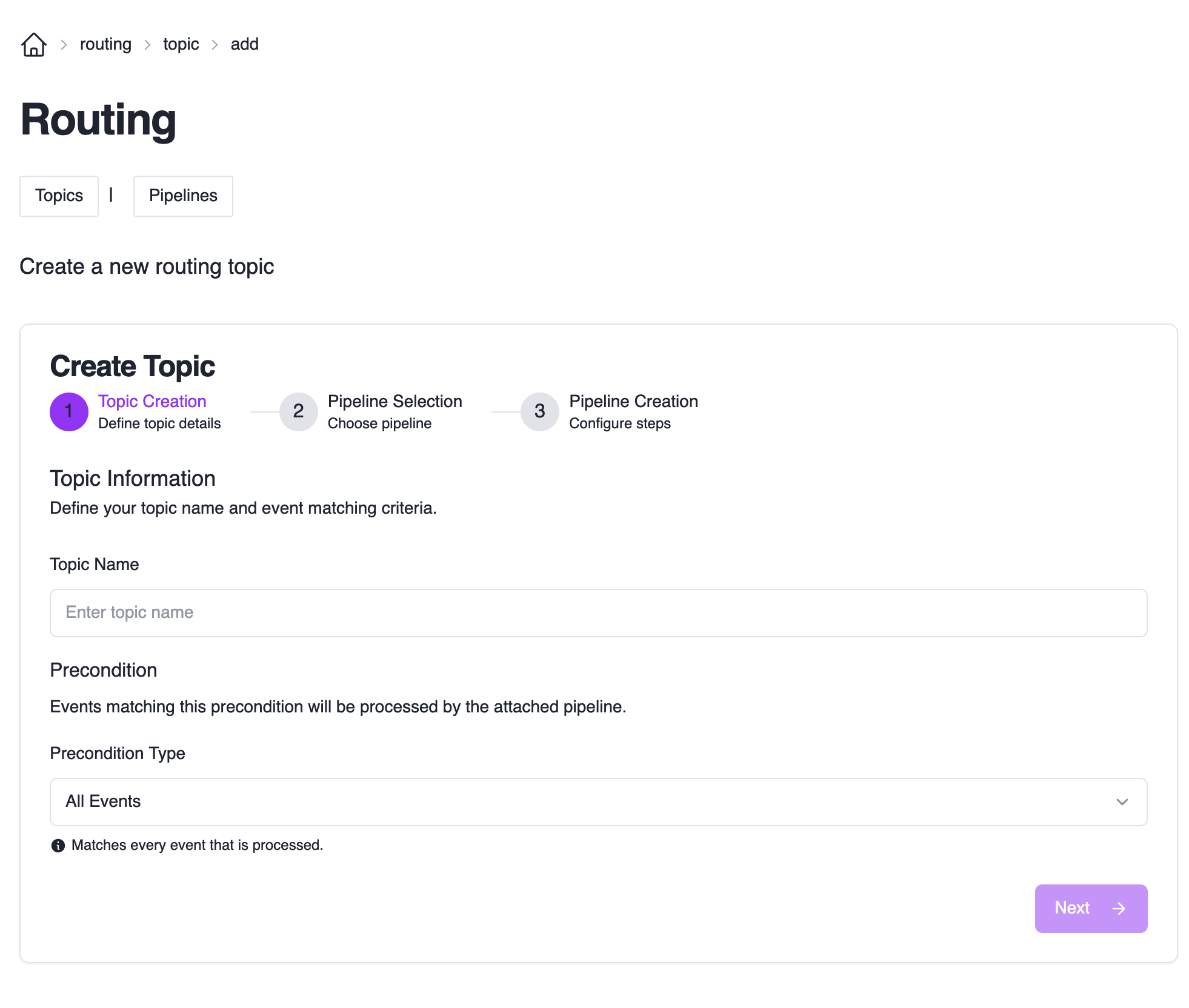

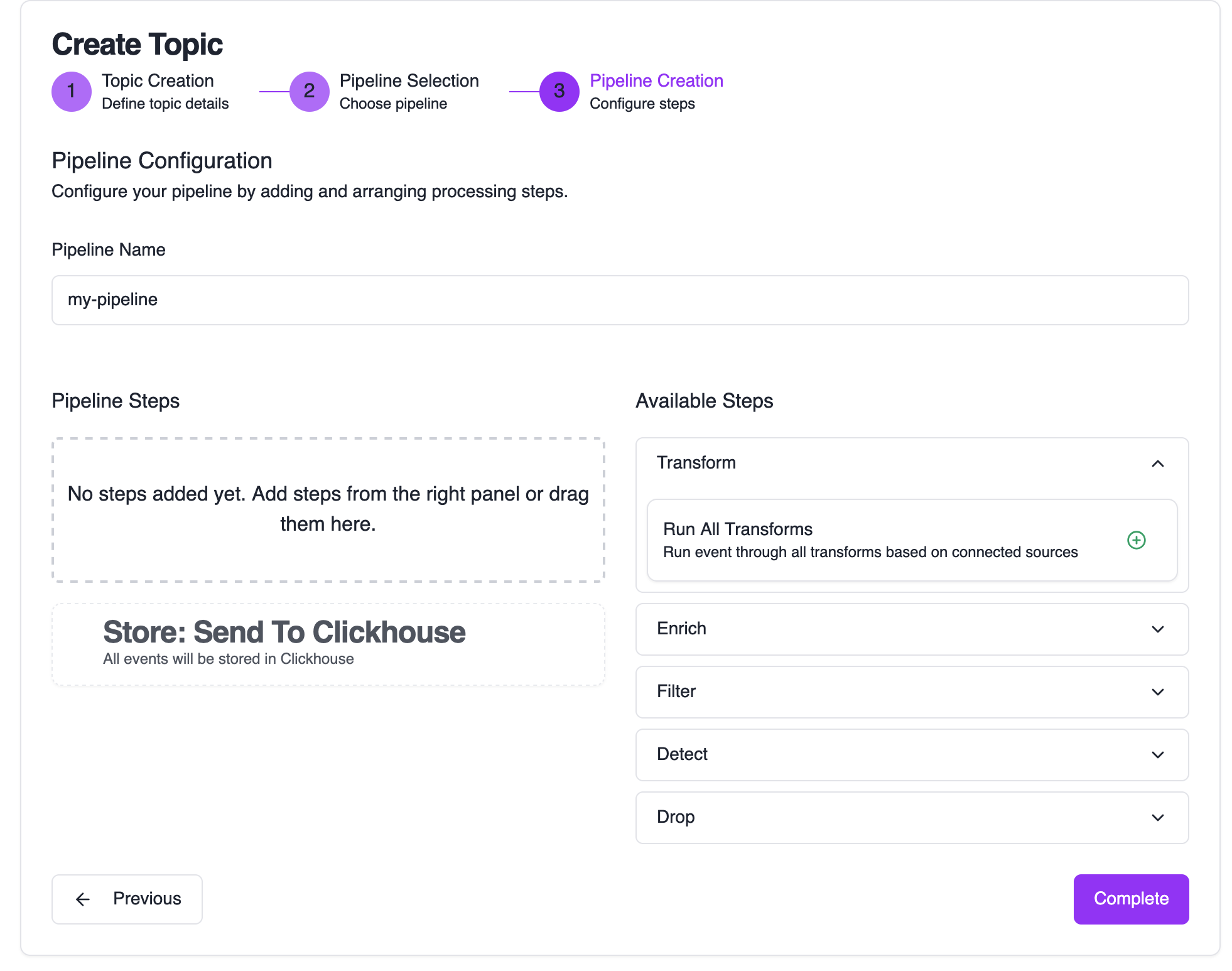

Creating a Topic

Start Topic Creation

Click the “Create Topic” button to open the topic creation wizard.

Configure Topic Details

Each topic needs a name and a precondition. The precondition determines which subset of events will be routed to your topic.

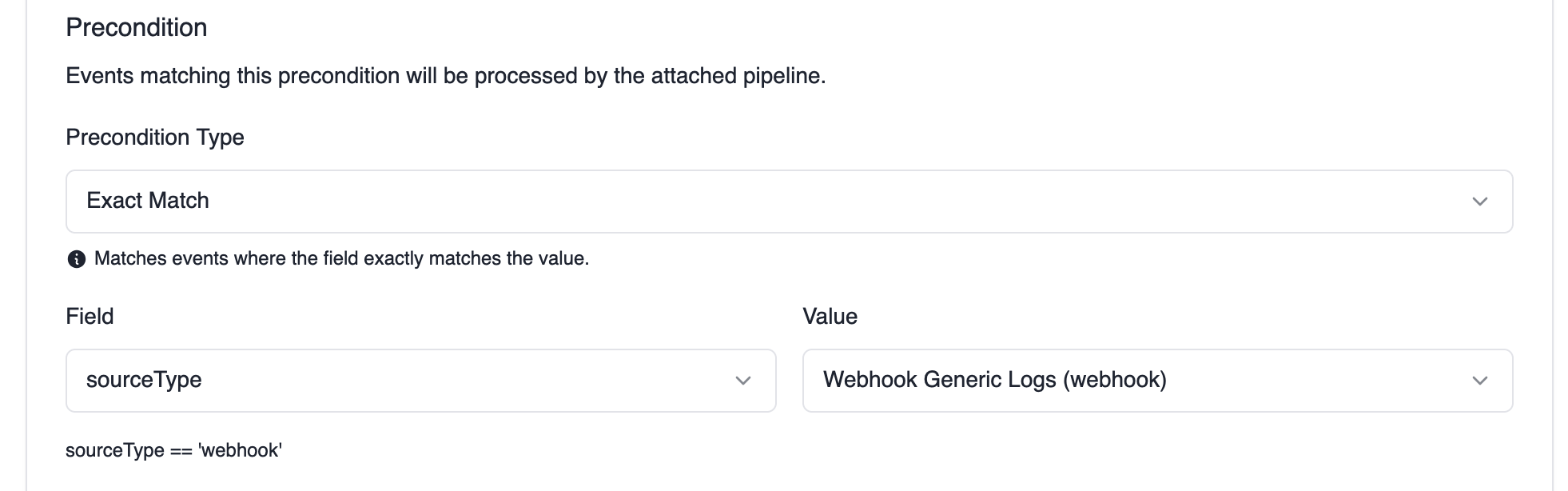

Example: The precondition above matches any events coming from webhook sources.

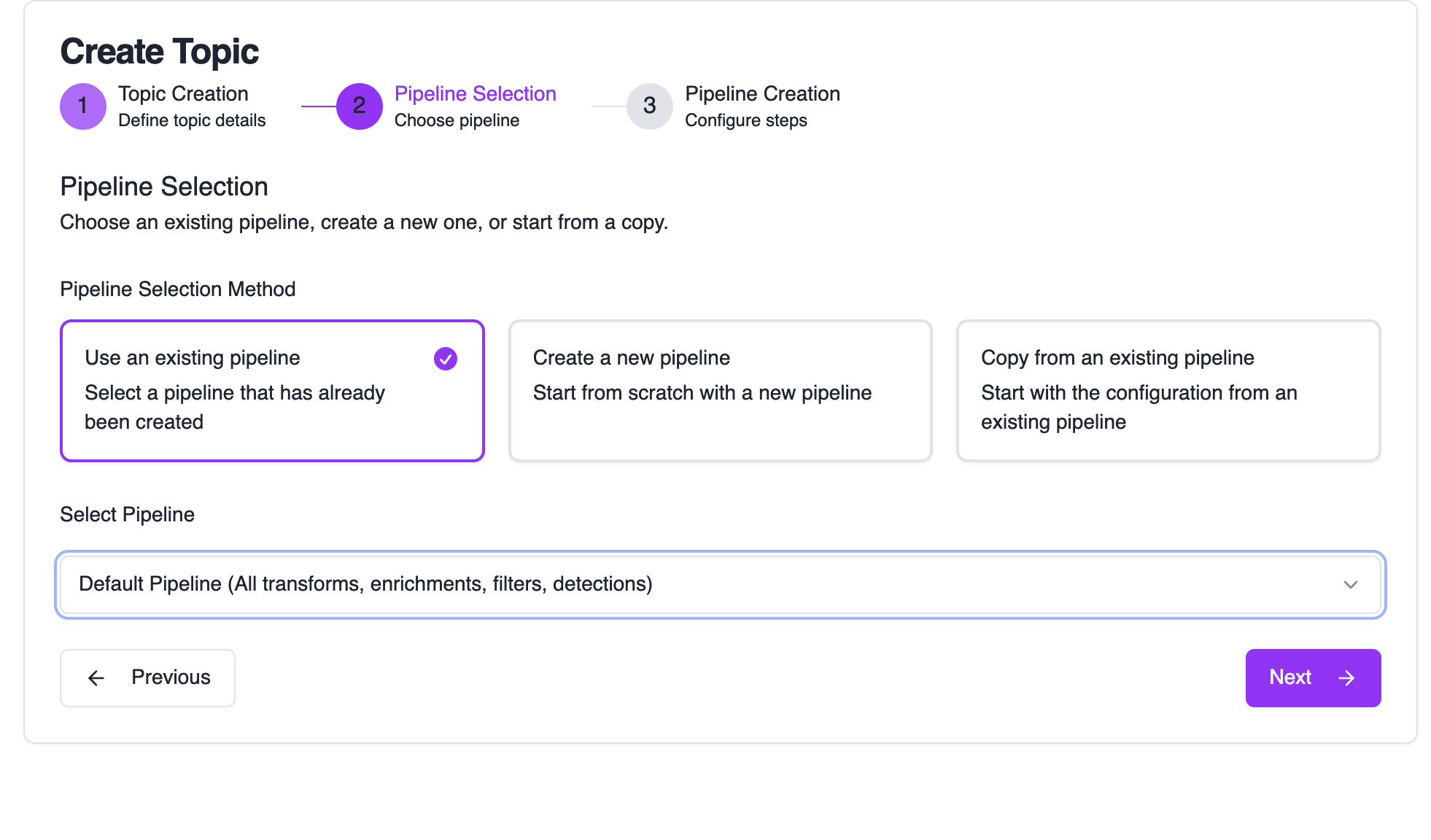

Choose Pipeline Configuration

Configure where your matching events will be processed:

Options:

- Use an existing pipeline: Reuse pipelines across multiple topics

- Create a new pipeline: Start with a fresh pipeline from scratch

- Copy from an existing pipeline: Build on top of existing processing

Configure Pipeline Steps

The wizard then opens the pipeline editor, where you configure how events are processed.

Click Complete to finish the wizard and set up your new resources.

Pipelines: Event Processing

Pipelines detail how events are processed before being sent to their final destinations. They consist of steps that are evaluated in order from top to bottom. Each step includes a function to apply and a precondition to select which events the step applies to.
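A pipeline, as described here, is an ordered list of steps, each pairing a precondition with a function. The sketch below models that shape; `PipelineStep`, `run_pipeline`, the field names, and the convention that a function returns None to drop an event are assumptions for illustration, not RunReveal's implementation.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional

Event = dict[str, Any]


@dataclass
class PipelineStep:
    name: str
    precondition: Callable[[Event], bool]         # which events the step applies to
    function: Callable[[Event], Optional[Event]]  # returns None to drop the event


def run_pipeline(event: Event, steps: list[PipelineStep]) -> Optional[Event]:
    # Steps run top to bottom; a step whose precondition fails is skipped.
    for step in steps:
        if not step.precondition(event):
            continue
        result = step.function(event)
        if result is None:
            return None  # dropped: later steps never see the event
        event = result
    return event


steps = [
    PipelineStep("tag-webhooks", lambda e: e.get("sourceType") == "webhook",
                 lambda e: {**e, "team": "platform"}),
    PipelineStep("drop-healthchecks", lambda e: e.get("path") == "/healthz",
                 lambda e: None),
]

print(run_pipeline({"sourceType": "webhook", "path": "/api"}, steps))
# {'sourceType': 'webhook', 'path': '/api', 'team': 'platform'}
print(run_pipeline({"sourceType": "webhook", "path": "/healthz"}, steps))
# None
```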

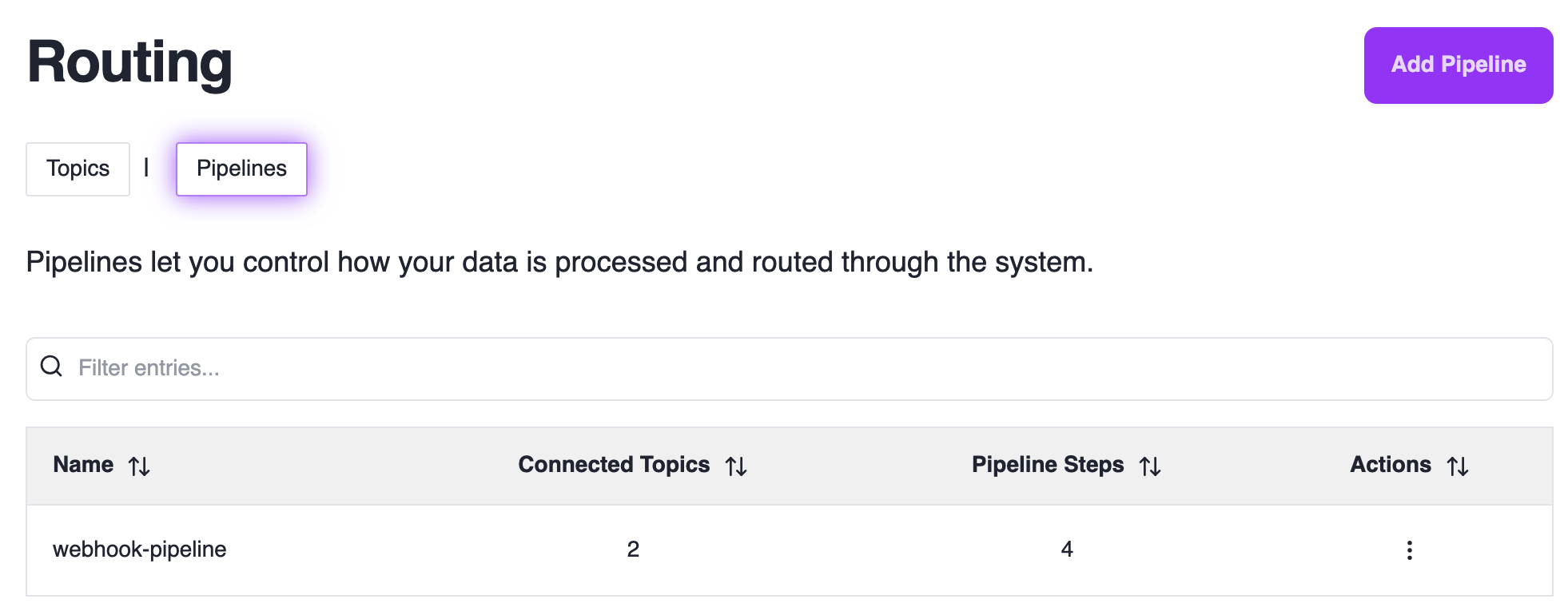

Creating a Pipeline

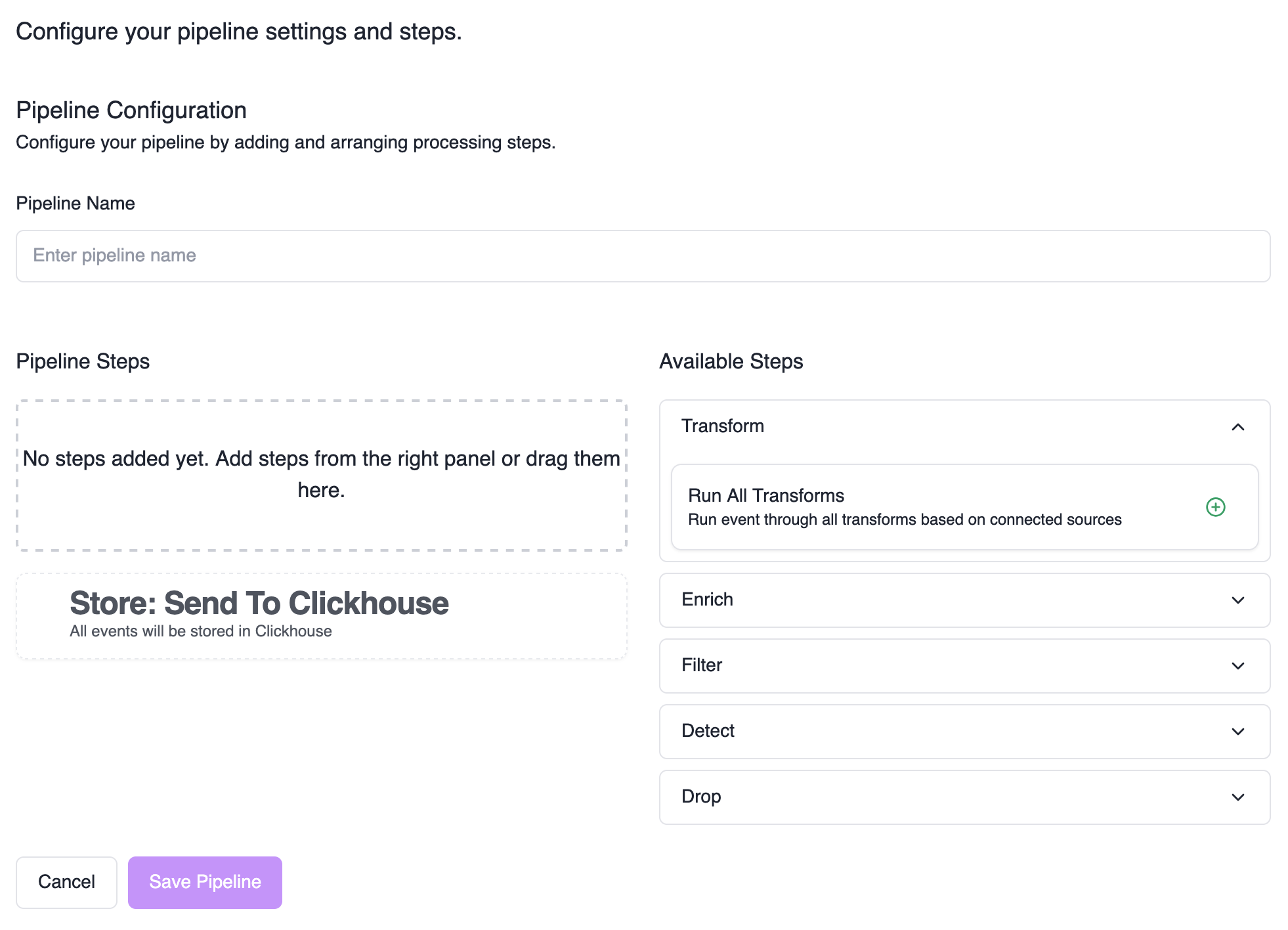

Access Pipeline Editor

Click “Add Pipeline” or edit an existing pipeline to open the pipeline editor.

Build Your Pipeline

- Left column: Your pipeline steps

- Right column: Available steps to add

- Drag and drop: Add steps from right to left column

- Reorder: Drag steps up/down to change evaluation order

Configure Step Preconditions

Each step can have preconditions to determine which events it applies to.

Shared Pipeline Warning: When you edit a pipeline shared by multiple topics, you’ll be prompted to unlock it first. Changes apply to every topic that uses the pipeline, so proceed with caution.

Processing Steps

Steps run in order from top to bottom, and each step applies only to events that match its preconditions. The available step types are:

| Step | Purpose | When to Use |
|---|---|---|
| Transform | Modify event data structure and content | • Standardizing data from different sources • Cleaning up field names • Restructuring JSON |
| Enrich | Add additional context to events | Enhancing events with contextual information for better analysis |
| Filter | Apply reusable filter rules | • When you need reusable, configurable filtering rules that can be shared across pipelines • When you want to archive events instead of dropping them |
| Detect | Run streaming detections (Sigma rules) on events as they are ingested | Real-time threat detection as events are ingested |
| Sample | Reduce event volume by sampling a percentage of events | • Cost optimization for noisy sources • Performance tuning |
| Drop | Remove events from processing | Simple, pipeline-specific event dropping that doesn’t require reusable filter rules |
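Several of these step types reduce to simple functions on an event. The sketch below models Transform (a field rename), Sample, and Drop, assuming a step returns the event to keep it or None to drop it. The field names and the helpers `rename_field`, `sample`, and `drop` are hypothetical; in RunReveal these steps are configured in the pipeline editor, not written as code, and whether the real Sample step is random or deterministic is not stated here.

```python
import random
from typing import Any, Callable, Optional

Event = dict[str, Any]
StepFn = Callable[[Event], Optional[Event]]  # returns None when the event is dropped


def rename_field(old: str, new: str) -> StepFn:
    # Transform: rename one field and leave the others untouched.
    def step(event: Event) -> Optional[Event]:
        if old not in event:
            return event
        updated = {k: v for k, v in event.items() if k != old}
        updated[new] = event[old]
        return updated
    return step


def sample(percent: float, rng: random.Random) -> StepFn:
    # Sample: keep each event with probability percent/100, drop the rest.
    def step(event: Event) -> Optional[Event]:
        return event if rng.random() * 100 < percent else None
    return step


def drop(event: Event) -> Optional[Event]:
    # Drop: remove every event that reaches the step; the step's
    # precondition decides which events those are.
    return None


rng = random.Random(42)  # seeded so runs are reproducible
normalize_ip = rename_field("src_ip", "srcIp")
keep_ten_percent = sample(10, rng)

noisy = [{"src_ip": "10.0.0.1", "seq": i} for i in range(10_000)]
renamed = [normalize_ip(e) for e in noisy]
kept = [e for e in renamed if keep_ten_percent(e) is not None]

print(renamed[0])        # {'seq': 0, 'srcIp': '10.0.0.1'}
print(len(kept))         # about 1,000; the exact count depends on the seed
print(drop(renamed[0]))  # None
```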

Precondition Types

Preconditions are used by topics and pipeline steps to determine which events they apply to. All preconditions use the same matching logic.

Field Targeting

Standard Fields: Select from the dropdown for normalized (parsed) fields (e.g., normalized.actor.email, normalized.src.ip, normalized.eventName).

Custom Fields: If a field is not normalized, you can target it in rawLog by selecting other as the field type and entering the field path directly:

- Top-level fields: Enter the field name (e.g., Namespace)

- Nested fields: Enter the GJSON path (e.g., request.namespace or events.0.parameters.#(name="client_id").value)
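To make the path syntax concrete, here is a minimal resolver for a small subset of GJSON: dot-separated keys, numeric array indexes, and a first-match #(key="value") query on string values. It is an illustrative sketch, not the GJSON library and not how RunReveal evaluates paths; `split_path` and `get_path` are hypothetical names, and the sample rawLog values are made up.

```python
import json
import re
from typing import Any

# Subset of GJSON query syntax: #(key="value") or #(key=="value"), string values only.
QUERY = re.compile(r'^#\((\w+)\s*==?\s*"([^"]*)"\)$')


def split_path(path: str) -> list[str]:
    # Split on dots, except dots inside quotes or #( ... ) queries.
    parts, buf, depth, in_quotes = [], [], 0, False
    for ch in path:
        if ch == '"':
            in_quotes = not in_quotes
        elif not in_quotes and ch == "(":
            depth += 1
        elif not in_quotes and ch == ")":
            depth -= 1
        if ch == "." and depth == 0 and not in_quotes:
            parts.append("".join(buf))
            buf = []
        else:
            buf.append(ch)
    parts.append("".join(buf))
    return parts


def get_path(doc: Any, path: str) -> Any:
    current = doc
    for part in split_path(path):
        query = QUERY.match(part)
        if query and isinstance(current, list):
            # Query: first array element whose key equals the wanted value.
            key, wanted = query.groups()
            current = next((item for item in current
                            if isinstance(item, dict) and item.get(key) == wanted), None)
        elif isinstance(current, list) and part.isdigit():
            index = int(part)
            current = current[index] if index < len(current) else None
        elif isinstance(current, dict):
            current = current.get(part)
        else:
            return None
        if current is None:
            return None
    return current


raw_log = json.loads('''
{"request": {"namespace": "prod"},
 "events": [{"parameters": [{"name": "app", "value": "Drive"},
                            {"name": "client_id", "value": "1234.apps"}]}]}
''')

print(get_path({"Namespace": "kube-system"}, "Namespace"))                 # kube-system
print(get_path(raw_log, "request.namespace"))                              # prod
print(get_path(raw_log, 'events.0.parameters.#(name="client_id").value'))  # 1234.apps
```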

Google Workspace Integration: This integration processes logs through the Google Admin SDK, which changes the JSON structure of rawLog, so field paths may not match the original audit log structure. Use GJSON paths or regex on rawLog for reliable targeting.

Use Cases and Examples


Combining Preconditions: Add multiple preconditions to the same step to create AND conditions (all must match). Add multiple steps of the same type to create OR conditions (any can match).

Example: AND vs OR Logic

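AND (one Drop step with two preconditions): Drop events where actor.email = [email protected] AND src.ip = 10.0.0.0/8

OR (two Drop steps): Drop events that match either step

Step 1: Drop where actor.email = [email protected]

Step 2: Drop where src.ip = 10.0.0.0/8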
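The difference between the two layouts can be checked with a small model. Assumptions: events are flat dicts keyed by the field names shown above, a Drop step drops an event only when all of its preconditions match, the src.ip condition means membership in the 10.0.0.0/8 range, and the email address is a hypothetical placeholder. `email_is`, `ip_in`, `drop_step`, and `survives` are illustrative names, not RunReveal APIs.

```python
import ipaddress
from typing import Any, Callable

Event = dict[str, Any]
Precondition = Callable[[Event], bool]


def email_is(addr: str) -> Precondition:
    return lambda e: e.get("actor.email") == addr


def ip_in(cidr: str) -> Precondition:
    net = ipaddress.ip_network(cidr)
    return lambda e: ipaddress.ip_address(e.get("src.ip", "0.0.0.0")) in net


def drop_step(*preconditions: Precondition) -> Precondition:
    # One Drop step: all of its preconditions must match (AND).
    return lambda e: all(p(e) for p in preconditions)


def survives(event: Event, steps: list[Precondition]) -> bool:
    # Several Drop steps: the event is dropped if any step matches (OR).
    return not any(step(event) for step in steps)


is_alice = email_is("alice@example.com")  # hypothetical address
internal = ip_in("10.0.0.0/8")

and_pipeline = [drop_step(is_alice, internal)]            # one step, two preconditions
or_pipeline = [drop_step(is_alice), drop_step(internal)]  # two steps

event = {"actor.email": "alice@example.com", "src.ip": "203.0.113.7"}
print(survives(event, and_pipeline))  # True: the IP is outside 10.0.0.0/8, so AND fails
print(survives(event, or_pipeline))   # False: the email alone matches Step 1
```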