Native AI Chat

RunReveal’s Native AI Chat is a purpose-built investigation agent that lets you analyze your security data conversationally, directly inside the RunReveal console. It executes complex queries, analyzes patterns, and guides you through investigations—all without your data ever leaving the platform.

What is Native AI Chat?

Native AI Chat is fully integrated into the RunReveal UI, giving you a secure, auditable, and persistent way to investigate logs and detections using natural language. Unlike external chat clients, Native AI Chat runs entirely within your workspace, leveraging your own API keys for model access.

Why Native AI Chat?

- Direct data access: Query logs, examine table schemas, and analyze security data in real time using the same APIs that you already use.

- Transparent reasoning: Every action is explained and auditable. See exactly why a query was run or a tool was used.

- Persistent context: The chat remembers your investigation history, enabling complex, multi-day investigations.

- Secure by design: Your data never leaves the RunReveal platform. All model calls are made server-side, using your own API keys.

What Can You Do with Native AI Chat?

- “Show me all failed login attempts from the last 24 hours”

- “Find privilege escalation events in the past week”

- “What IP addresses had the most failed authentication attempts?”

Common Use Cases

“I need to investigate a suspicious login from IP 192.168.1.100” → The agent analyzes login patterns, cross-references the IP with threat intel, and suggests next steps.

Supported Model Providers

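Native AI Chat supports multiple LLM providers. Workspace admins can add API keys (or, for Amazon Bedrock, an IAM role) for any or all of the following:

- Anthropic (Claude)

- OpenAI (ChatGPT), including OpenAI-compatible providers configured with a base URL

- Google AI (Gemini)

- Amazon Bedrock (AWS)

Each model runs securely, and your data never leaves the RunReveal platform.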

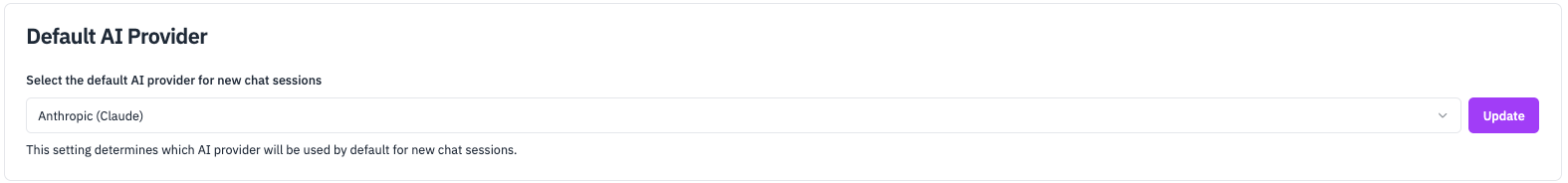

Admins can now configure multiple providers and set a default provider for the workspace. When starting a new chat, users can select from any configured provider. Once a provider is selected for a chat session, it is fixed for that session, but you can start a new chat with a different provider at any time.

Setup Guide: Native AI Chat

Prerequisites

- A RunReveal account with admin or API access

- API key(s) for your preferred LLM provider(s): Anthropic (Claude), OpenAI (ChatGPT), Google AI (Gemini), or an IAM role if using Amazon Bedrock (AWS)

- Access to your RunReveal workspace settings

Step 1: Open AI Model Providers Settings

- In the RunReveal UI, go to Workspace Settings → AI Model Providers

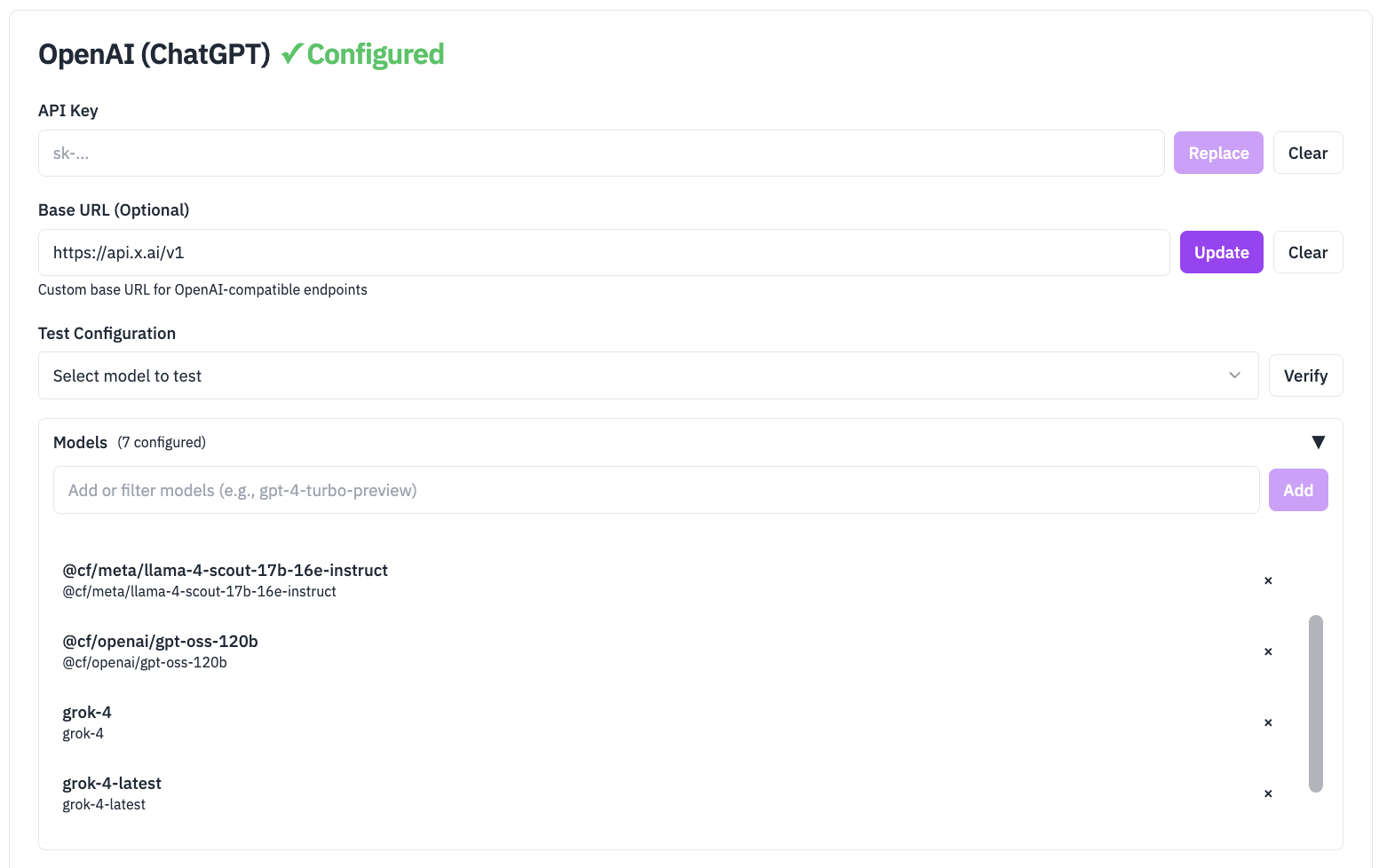

Step 2: Add Your LLM API Keys and Base URL (If applicable)

- Add API keys for any providers you want to enable (Anthropic, OpenAI, Google AI, or Amazon Bedrock).

- To use an OpenAI-compatible model, enter its base URL (for example, https://api.x.ai/v1 for Grok or https://api.cloudflare.com for Cloudflare AI).

- You can add multiple providers; you do not need to remove unused keys.
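OpenAI-compatible providers conventionally serve chat completions at `POST {base_url}/chat/completions` with a Bearer API key. The sketch below assumes the provider follows that convention and builds (but does not send) such a request with Python's standard library, so you can sanity-check a base URL and key outside RunReveal before saving them. `build_chat_request` and the model name are hypothetical placeholders, and this is not necessarily how RunReveal itself calls the provider.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request.

    Assumes the provider follows the OpenAI convention of serving
    POST {base_url}/chat/completions with a Bearer token.
    """
    # Normalize so "https://api.x.ai/v1/" and "https://api.x.ai/v1" produce the same URL.
    url = base_url.rstrip("/") + "/chat/completions"
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("https://api.x.ai/v1/", "YOUR_API_KEY", "your-model-name", "ping")
    print(req.full_url)
    # To actually send it: urllib.request.urlopen(req)
```

Sending the request with `urllib.request.urlopen(req)` and getting a non-error response is a reasonable signal that the base URL and key are usable.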

Step 3: Set a Default Provider (Admin Only)

- In the provider settings, select which provider should be the default for your workspace.

- This provider will be pre-selected for new chat sessions, but users can change it.

Step 4: Start Chatting and Select Provider

- When starting a new chat, you’ll see a dropdown to select from any configured provider.

- Once you select a provider/model, it will be used for the duration of that chat session.

- To use a different provider, simply start a new chat and select a different provider.
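The provider rules above (a workspace default, a per-chat choice, and no switching once a session has started) can be modeled as a small data structure. This is a hypothetical illustration of the documented behavior, not RunReveal's implementation; `Workspace`, `ChatSession`, and `start_chat` are invented names.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass(frozen=True)
class ChatSession:
    # frozen=True: the provider cannot be reassigned after the session is created.
    provider: str
    model: str


@dataclass
class Workspace:
    configured_providers: Set[str] = field(default_factory=set)
    default_provider: Optional[str] = None

    def start_chat(self, model: str, provider: Optional[str] = None) -> ChatSession:
        # Fall back to the workspace default when the user does not pick a provider.
        chosen = provider or self.default_provider
        if chosen not in self.configured_providers:
            raise ValueError(f"provider {chosen!r} is not configured")
        return ChatSession(provider=chosen, model=model)


if __name__ == "__main__":
    ws = Workspace({"anthropic", "openai"}, default_provider="anthropic")
    first = ws.start_chat("claude-sonnet-4-5-20250929")
    # Switching providers means starting a new session, not mutating the old one.
    second = ws.start_chat("your-openai-model", provider="openai")
    print(first.provider, second.provider)
```

Making the session immutable mirrors the rule that a provider is fixed for the life of a chat; changing providers always goes through `start_chat`.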

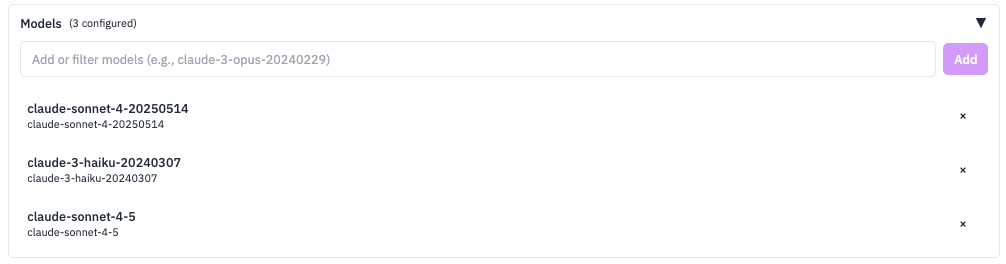

Add Models

Add your favorite or custom model for a provider by entering its name in the “Models” input and clicking “Add”. For example, you can add claude-sonnet-4-5-20250929 to use the Claude Sonnet 4.5 model. See the Claude models overview for available model names.

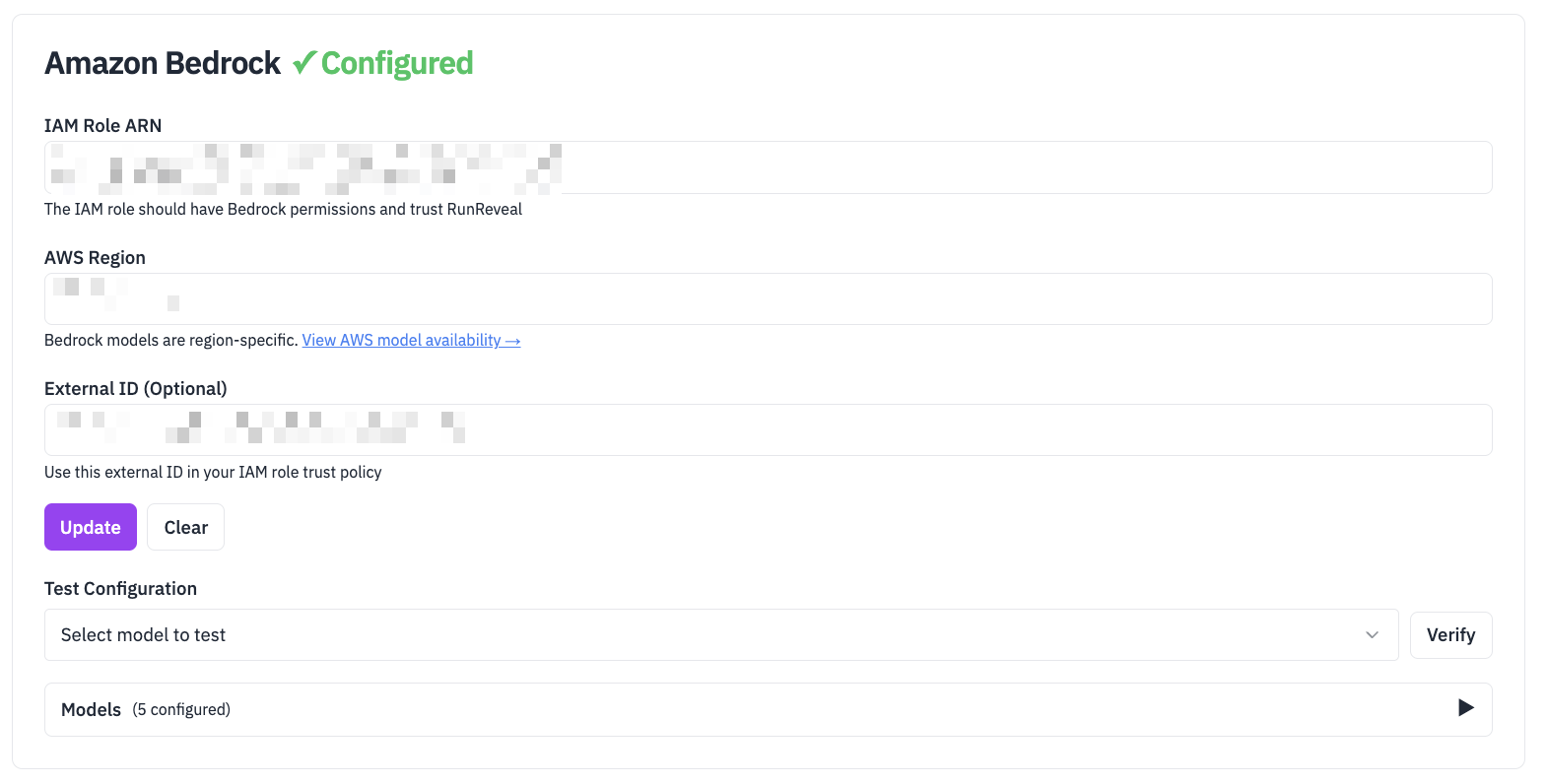

Amazon Bedrock Setup

Amazon Bedrock requires AWS IAM role configuration instead of API keys. Follow these steps to set up Bedrock access:

Important Notes:

- Configuration: Uses an IAM role ARN plus an External ID (for security) instead of an API key

- Model Selection: Currently limited to Claude 3.5 Sonnet (model access must be enabled in the Bedrock console)

- Regional: Single-region inference only; specify the region during configuration

Step 1: Configure Bedrock in RunReveal

- In the RunReveal UI, go to Workspace Settings → AI Model Providers

- Click Configure next to Amazon Bedrock

- Enter the ARN of your IAM role and the External ID that you will add to your IAM role's trust policy. If you’ve already configured a role for RunReveal to assume for reading S3, you can reuse that same role and External ID here after attaching the Bedrock access policy below.

Step 2: Create IAM Policy for Bedrock

- Attach the following policy to your IAM role:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "bedrock:InvokeModel",
        "bedrock:InvokeModelWithResponseStream",
        "bedrock:ListFoundationModels"
      ],
      "Resource": "*"
    }
  ]
}
```

- Optional: For better security, you can restrict the policy to specific model ARNs instead of using `"Resource": "*"`.
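A minimal sketch of the optional restriction, assuming the standard Bedrock foundation-model ARN format `arn:aws:bedrock:REGION::foundation-model/MODEL-ID`. The helper name `bedrock_policy` is hypothetical, and the model ID shown is only an example; confirm the exact ID in the Bedrock console. Because list actions such as `bedrock:ListFoundationModels` are generally not scoped to individual models, the sketch keeps that action on `"*"` in a separate statement (check the AWS Service Authorization Reference for Bedrock to confirm for your account).

```python
import json
from typing import List


def bedrock_policy(region: str, model_ids: List[str]) -> dict:
    """Build a Bedrock access policy that only allows invoking the listed foundation models."""
    model_arns = [
        # Foundation-model ARNs leave the account field empty.
        f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
        for model_id in model_ids
    ]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Invocation is limited to the listed models only.
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": model_arns,
            },
            {
                # Listing models stays on "*" (assumed not to support per-model scoping).
                "Effect": "Allow",
                "Action": ["bedrock:ListFoundationModels"],
                "Resource": "*",
            },
        ],
    }


if __name__ == "__main__":
    policy = bedrock_policy("us-east-1", ["anthropic.claude-3-5-sonnet-20240620-v1:0"])
    print(json.dumps(policy, indent=2))
```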

Step 3: Create IAM Role in AWS

If you’ve configured a more general role for RunReveal to assume, you can attach the policy to that role and skip this step.

- Log into AWS Console and navigate to IAM

- Create a new role with the following configuration:

  - Trusted entity: AWS account

  - Account ID: Use the account ID shown in the RunReveal AWS Bedrock configuration

  - External ID: Use the External ID shown in the RunReveal AWS Bedrock configuration

Trust Relationship Configuration

The trust relationship is a critical security component that controls which AWS account can assume your role. We recommend using an External ID to prevent unauthorized access.

Example Trust Policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::253602268883:root"
      },
      "Action": "sts:AssumeRole",
      "Condition": {
        "StringEquals": {
          "sts:ExternalId": "your-unique-external-id-from-runreveal"
        }
      }
    }
  ]
}
```

Key Components:

- Principal: The AWS account that can assume this role (RunReveal’s account)

- Action: `sts:AssumeRole` allows the role to be assumed

- Condition: The External ID must match exactly what’s shown in the RunReveal form
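If you manage IAM as code, you can generate the trust policy instead of editing JSON by hand. This is a minimal sketch; `trust_policy` is a hypothetical helper, and both arguments must be copied from the RunReveal Bedrock form rather than invented. It adds two basic input checks: AWS account IDs are 12 digits, and an empty External ID would defeat the purpose of the condition.

```python
import json
import re


def trust_policy(runreveal_account_id: str, external_id: str) -> dict:
    """Return a trust policy that lets the given account assume the role only with the External ID."""
    # AWS account IDs are exactly 12 digits.
    if not re.fullmatch(r"\d{12}", runreveal_account_id):
        raise ValueError("account ID must be 12 digits")
    if not external_id:
        raise ValueError("external ID must not be empty")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{runreveal_account_id}:root"},
                "Action": "sts:AssumeRole",
                # StringEquals is an exact, case-sensitive comparison.
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(trust_policy("253602268883", "your-unique-external-id-from-runreveal"), indent=2))
```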

Learn More: For detailed information about trust relationships and External IDs, see the AWS IAM documentation on trust policies and External ID best practices.

Step 4: Attach Policy to Role

Attach the Bedrock policy created in Step 2 to the role.
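If you prefer to script Steps 2 through 4, the boto3 IAM client's `create_role`, `create_policy`, and `attach_role_policy` calls cover them. This sketch takes an already-constructed client (for example `boto3.client("iam")`) plus the trust and permissions documents as dicts; the role and policy names are hypothetical placeholders. It creates a customer-managed policy and attaches it, matching the console flow above; an inline policy via `put_role_policy` would also work.

```python
import json
from typing import Any


def create_bedrock_role(iam: Any, role_name: str, trust: dict, permissions: dict,
                        policy_name: str = "RunRevealBedrockAccess") -> str:
    """Create the role and the Bedrock policy, attach the policy, and return the role ARN.

    `iam` is expected to be a boto3 IAM client, e.g. boto3.client("iam").
    """
    # Step 3: create the role; the trust policy must be passed as a JSON string.
    role = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(trust),
    )
    # Step 2: create the customer-managed Bedrock policy.
    policy = iam.create_policy(
        PolicyName=policy_name,
        PolicyDocument=json.dumps(permissions),
    )
    # Step 4: attach the policy to the role.
    iam.attach_role_policy(RoleName=role_name, PolicyArn=policy["Policy"]["Arn"])
    # This ARN is what you paste into the RunReveal Bedrock form in Step 5.
    return role["Role"]["Arn"]
```

Usage would look like `create_bedrock_role(boto3.client("iam"), "RunRevealBedrock", trust_doc, bedrock_doc)`, where the two documents are the JSON policies shown in this guide.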

Step 5: Get Role ARN

- After creating the role, copy the Role ARN (format: `arn:aws:iam::ACCOUNT-ID:role/ROLE-NAME`)

- Paste the Role ARN into the RunReveal Bedrock configuration form

- Click Save to complete the setup
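A quick format check can catch common copy-paste mistakes, such as pasting a policy ARN or a bare role name instead of the role ARN. This is a rough sketch, not an official validator: the pattern assumes the standard, China, and GovCloud partitions, a 12-digit account ID, and an optional role path before the role name.

```python
import re

# Partition, 12-digit account ID, then "role/" followed by an optional path and the role name.
ROLE_ARN = re.compile(r"arn:aws(?:-cn|-us-gov)?:iam::(\d{12}):role/[A-Za-z0-9+=,.@_/-]+")


def looks_like_role_arn(value: str) -> bool:
    """Rough format check for an IAM role ARN before pasting it into RunReveal."""
    return ROLE_ARN.fullmatch(value.strip()) is not None


if __name__ == "__main__":
    print(looks_like_role_arn("arn:aws:iam::123456789012:role/RunRevealBedrock"))
```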

Step 6: Test Bedrock Access

- Start a new chat in RunReveal

- Select Amazon Bedrock from the provider dropdown

- Ask a test question to verify the connection works
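If the test chat fails, one possible cause is that model access is not enabled in the configured region. The RunReveal role can only be assumed by RunReveal's account, so you cannot test through it directly; as a hedged alternative, you can send one short prompt with your own AWS credentials through the Bedrock Runtime Converse API. The helper name is hypothetical; pass it `boto3.client("bedrock-runtime", region_name=...)` using the same region you entered in RunReveal.

```python
from typing import Any


def bedrock_smoke_test(runtime: Any, model_id: str, prompt: str = "Reply with OK.") -> str:
    """Send one short prompt through the Bedrock Runtime Converse API and return the reply text.

    `runtime` is expected to be boto3.client("bedrock-runtime", region_name=...).
    """
    response = runtime.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 16},  # keep the test call small and cheap
    )
    # The reply is a list of content blocks; join the text from any text blocks.
    blocks = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in blocks)
```

An access error from this call suggests a model-access or region problem in AWS; a successful reply while RunReveal still fails points back to the role, policy, or External ID.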

Security Note: The External ID on the AWS Bedrock form is unique to your workspace and helps prevent unauthorized access. Always use the exact External ID shown in the modal when creating your IAM role. See the Trust Relationship Configuration section above for detailed setup instructions.

Usage

Once set up, you can ask:

- “Show me all failed login attempts from the last 24 hours”

- “What detection rules do we have for privilege escalation?”

- “Create a new detection for suspicious file downloads”

- “What tables contain network traffic data?”

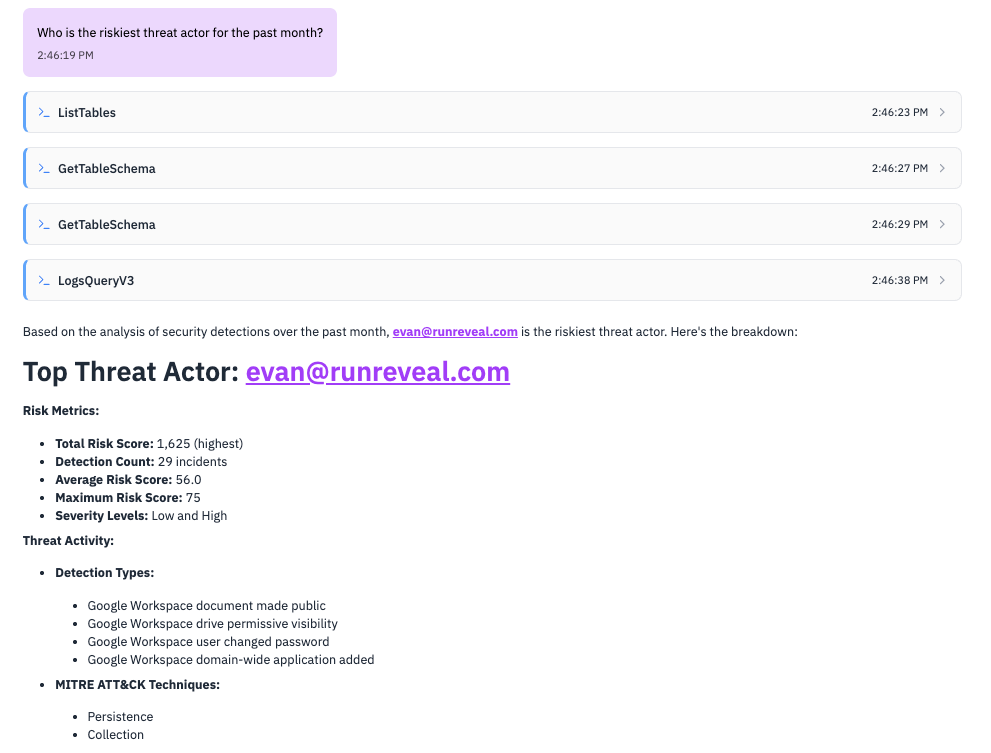

[Figure: Native AI Chat responding to an investigation prompt]

[Figure: How the agent reorients after a failed query]

How It Works: The Investigation Process

Native AI Chat uses a structured approach to investigations, cycling through four phases: Observe, Orient, Decide, and Act.

Example: “Investigate failed logins from suspicious IPs”

- Observe: “Let me check your authentication logs and IP reputation data”

- Orient: “I found 50 failed logins from 3 high-risk IPs in the last hour”

- Decide: “I should cross-reference these with your user directory and check for successful logins”

- Act: Queries user tables, generates timeline, suggests blocking actions
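The four phases can be read as a loop that repeats until there is nothing left to check. The sketch below is a toy model of that idea, not RunReveal's implementation: `Investigation`, `investigate`, and the four callables are hypothetical stand-ins for running queries, interpreting results, choosing a next step, and carrying it out.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Investigation:
    question: str
    findings: List[str] = field(default_factory=list)


def investigate(
    inv: Investigation,
    observe: Callable[[Investigation], List[str]],  # e.g. run queries against log tables
    orient: Callable[[List[str]], str],             # summarize what the results mean
    decide: Callable[[str], Optional[str]],         # pick the next action, or None to stop
    act: Callable[[str], List[str]],                # carry out the action, return new findings
    max_rounds: int = 5,
) -> Investigation:
    """Hypothetical Observe/Orient/Decide/Act loop with a fixed round budget."""
    for _ in range(max_rounds):
        observations = observe(inv)
        assessment = orient(observations)
        inv.findings.append(assessment)
        action = decide(assessment)
        if action is None:
            break  # nothing left to investigate
        inv.findings.extend(act(action))
    return inv
```

The round budget is a design choice for the sketch: it keeps a loop that keeps finding "one more thing to check" from running indefinitely.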

Getting the Most from Native AI Chat

Effective Prompts

- Be specific about timeframes: “last 24 hours” vs “recently”

- Include context: “high-priority alerts” vs “all alerts”

- Ask follow-up questions: “What caused this spike in DNS queries?”

Investigation Tips

- Start broad, then narrow down: “Show me authentication events” → “Focus on failed logins”

- Ask for explanations: “Why did this detection fire?”

- Request recommendations: “What should I investigate next?”

Related Documentation

Now that you’ve set up your chat settings, explore these related guides:

- Detections, Signals & Alerts Quick Start Guide - Complete setup guide for your detection workflow

- Sigma Streaming - Use Sigma rules for standardized threat detection

- Detection as Code - Manage detections through code and version control

- Notifications - Set up alerting and notification channels

- Agents - Learn how to configure agents

- Model Context Protocol - Advanced AI integration for detection development